GNNs as Adapters for LLMs on Text-Attributed Graphs

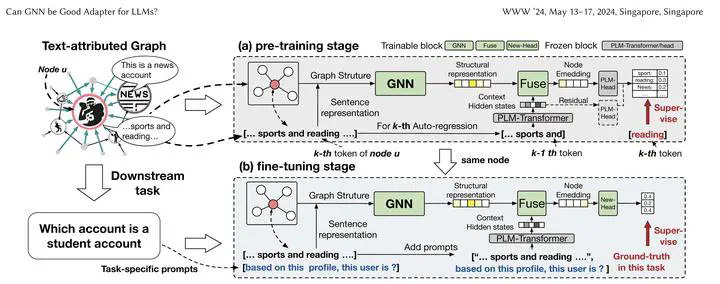

Framework of GraphAdapter.

Abstract

Text-attributed graphs (TAGs), which interlace textual information with graph structures, pose unique challenges and opportunities for joint text and graph modeling. Recently, large language models (LLMs) have greatly advanced the generative and predictive power of text modeling. However, existing approaches to jointly modeling text and graph structures either incur high computational costs or offer limited representational power. In this work, we propose GraphAdapter, which harnesses the power of an LLM on TAGs without fine-tuning its weights. Given a TAG, an adapter GNN is trained to reduce the LLM’s error in predicting the next word of the text sequences on nodes. Once trained, this GNN adapter can be seamlessly fine-tuned for various downstream tasks. Through extensive node classification experiments across multiple domains, GraphAdapter demonstrates an average improvement of 5% while being more computationally efficient than baselines. We further validate its effectiveness with various language models, including RoBERTa, GPT-2, and Llama 2.
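To make the training signal concrete, the following is a minimal, dependency-free sketch of the idea stated above: a frozen language model supplies next-word logits, and only a small graph-conditioned adapter is trained to reduce the next-word prediction error. It is an illustration under strong assumptions, not the GraphAdapter implementation. The additive logit correction, the one-hop mean aggregation, the toy graph, and every name (`mean_aggregate`, `adapted_probs`, `train_adapter`, `avg_loss`) are hypothetical choices made for this example.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def mean_aggregate(features, neighbors, node):
    """Mean of a node's own features and its neighbors' (one GNN-style hop)."""
    group = [node] + neighbors[node]
    dim = len(features[node])
    return [sum(features[n][d] for n in group) / len(group) for d in range(dim)]

def adapted_probs(llm_logits, W, h):
    """Frozen LLM logits plus a trainable, graph-conditioned correction W @ h.
    ASSUMPTION: additive fusion is chosen here only for simplicity."""
    correction = [sum(w * x for w, x in zip(row, h)) for row in W]
    return softmax([l + c for l, c in zip(llm_logits, correction)])

def avg_loss(llm_logits, targets, features, neighbors, W):
    """Average next-word cross-entropy over all nodes."""
    losses = []
    for node, target in targets.items():
        h = mean_aggregate(features, neighbors, node)
        losses.append(-math.log(adapted_probs(llm_logits[node], W, h)[target]))
    return sum(losses) / len(losses)

def train_adapter(llm_logits, targets, features, neighbors, steps=200, lr=0.5):
    """Plain SGD on the adapter weights W; the LLM logits are never modified."""
    vocab = len(next(iter(llm_logits.values())))
    dim = len(next(iter(features.values())))
    W = [[0.0] * dim for _ in range(vocab)]  # the only trainable parameters
    for _ in range(steps):
        for node, target in targets.items():
            h = mean_aggregate(features, neighbors, node)
            p = adapted_probs(llm_logits[node], W, h)
            for v in range(vocab):
                g = p[v] - (1.0 if v == target else 0.0)  # d(cross-entropy)/d(logit_v)
                for d in range(dim):
                    W[v][d] -= lr * g * h[d]
    return W

# Toy TAG (hypothetical): two connected pairs of nodes, a 3-word vocabulary.
neighbors = {0: [1], 1: [0], 2: [3], 3: [2]}
features = {0: [1.0, 0.0], 1: [1.0, 0.0], 2: [0.0, 1.0], 3: [0.0, 1.0]}
llm_logits = {n: [0.0, 0.0, 0.0] for n in range(4)}  # frozen, uninformative "LLM"
targets = {0: 0, 1: 0, 2: 1, 3: 1}                   # next-word index per node

zero_W = [[0.0, 0.0] for _ in range(3)]
before = avg_loss(llm_logits, targets, features, neighbors, zero_W)
W = train_adapter(llm_logits, targets, features, neighbors)
after = avg_loss(llm_logits, targets, features, neighbors, W)
print(f"next-word loss before adapter: {before:.4f}, after: {after:.4f}")
```

In this toy setting the frozen model alone is uninformative, so any reduction in loss comes entirely from the graph-conditioned adapter. A real system would replace the hand-written logits with the outputs of a pretrained language model and the mean aggregation with a learned GNN.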